How SBN Studios’ Premier Short Became AI Community’s Comedy Sensation

Breaking down the storytelling, strategy, and technical brilliance behind a 60‑second AI masterpiece

SBN Media Team

7/28/2025 · 12 min read

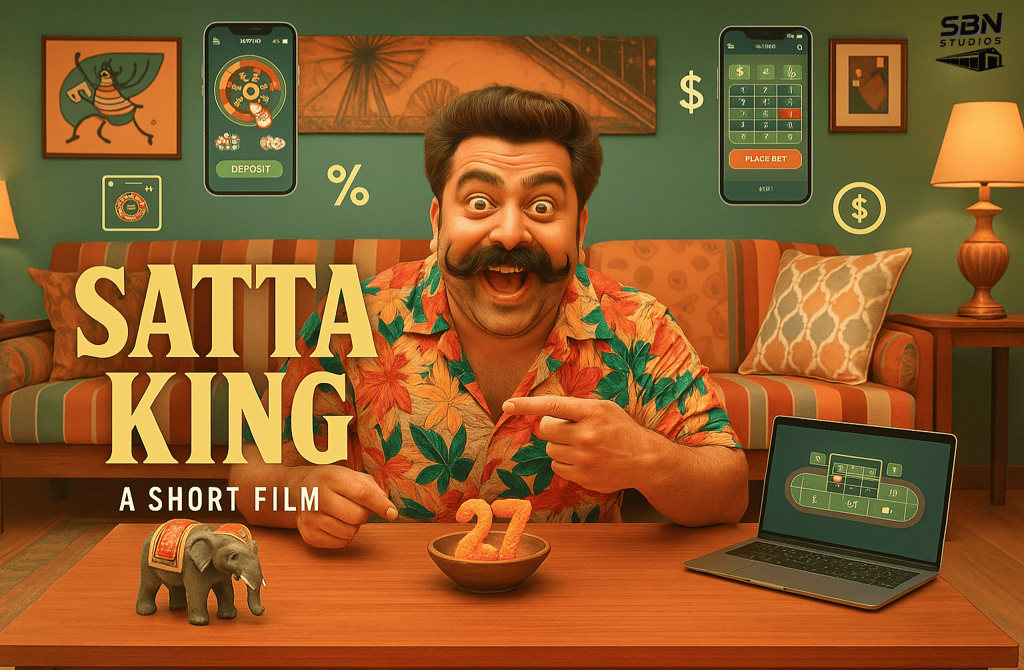

The era when AI could only create low-res, uncanny visuals is over. Today, artificial intelligence can generate truly realistic videos: not just short visual stunts, but sequences with believable lighting, motion, physics, and character consistency. This shift from “glitchy experiment” to “practical creative tool” is what made Gourav Ghosh’s short film Satta King (produced by SBN Studios) break out in the AI creator community. The short’s viral rise is bigger than a one-off hit; it is evidence that AI is now a practical tool for filmmakers, storytellers, and brands.

In this blog post, we will do three things: 1) introduce the real, recent progress in AI-driven realistic video generation, 2) walk through the creative and technical choices behind Satta King, and 3) unpack what this means for filmmakers, brands, and storytellers going forward.

Watch the short film here:

AI can now generate realistic video — and that is a big deal!

Until very recently, AI-generated visuals were mainly still images or very short, experimental clips. Faces looked wrong, motion flickered, and continuity between frames was inconsistent. But the last two to three years brought an explosion of improvements, driven by stronger model architectures, larger training datasets, and new engineering techniques.

On the image side, diffusion models and transformer-based text encoders have driven huge quality gains. These models now produce images with far better photorealism, improved adherence to complex prompts, and far fewer anatomical errors. That means character portraits, background plates, and texture details can look convincingly “real” without a heavy VFX pipeline.

On the video side, new approaches treat video as a sequence of tokens or patches, refining frames iteratively to maintain motion coherence and physical realism. Leading systems now produce clips with consistent character appearances, correct object permanence, and coherent environmental effects like lighting shifts and natural camera motion. Some platforms even combine visual generation with synchronized audio: native dialogue, ambient sounds, and foley are becoming part of the same pipeline, which matters a lot for comedic timing and emotional beats.

Why this matters: when AI can produce believable visuals and synchronized sound, the technology becomes useful for storytelling, not just novelty. Filmmakers can prototype, iterate, and produce professional-looking sequences without the usual time and cost constraints. That practical potential is why the community’s reaction to Satta King was more than curiosity; it was recognition of a new creative language.

The short: concept and creative intent

Satta King was designed as a compact, character-led comedy, with two creative goals in mind:

1) Make a tight, funny short that lands on timing and performance.

2) Use AI as a creative department, not a gimmick, to accelerate production while preserving human taste and editorial control.

We wanted the short to be playful, slightly surreal, and obviously crafted with care. The concept needed to rely more on character beats and editing than on expensive stunts or large-scale choreography. That decision let the technology play to its strengths, while humans drove the comedic rhythm.

Key creative choices:

Keep scenes focused on a few central characters, so AI continuity could be sustained.

Use controlled lighting and clear silhouettes, which generative models handle better.

Stage jokes across simple beats rather than relying on single-frame chaos.

Emphasize editing and sound design to sell comic timing.

This mix of constraints and creative intent made it possible to use AI aggressively, while still delivering a short that felt emotionally and comically true.

How we used AI in the workflow — a practical breakdown

For this project, we combined multiple prompting methods to get the most out of the AI tools:

Zero-Shot Prompting – Giving the AI a direct task without any examples, which encouraged more unexpected and creative outputs.

Chain-of-Thought Prompting – Breaking down the sequence of steps in detail to maintain logical flow and ensure smooth scene continuity.

Multi-Shot (Few-Shot) Prompting – Providing several curated reference examples to refine tone and style and to maintain visual cohesion throughout the video.

This hybrid approach allowed us to balance AI’s creativity with human-directed precision, ensuring that every frame aligned with the client’s branding and narrative goals.
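To make the difference between the three methods concrete, here is a minimal sketch of how the prompt text might be assembled for each. The function names, wording, and example strings are hypothetical illustrations, not the exact prompts used on Satta King.

```python
def zero_shot(task: str) -> str:
    """Zero-shot: the task alone, with no examples."""
    return task


def chain_of_thought(task: str, steps: list[str]) -> str:
    """Step-by-step: spell out the sequence so scene logic stays continuous."""
    lines = [f"Task: {task}", "Follow these steps in order:"]
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    return "\n".join(lines)


def multi_shot(task: str, examples: list[str]) -> str:
    """Multi-shot (few-shot): curated reference examples come before the task."""
    lines = ["Match the tone and style of these reference prompts:"]
    lines += [f"Example {i}: {ex}" for i, ex in enumerate(examples, start=1)]
    lines.append(f"Now write: {task}")
    return "\n".join(lines)


task = "a shot prompt for the shopkeeper's double take"
print(multi_shot(task, ["warm dusk market, 35 mm, handheld",
                        "close-up reaction, soft highlights"]))
```

Zero-shot leaves the model the most room to surprise you; the other two trade some of that freedom for control over sequence and style.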

We treated AI like a department head that handles visuals and rough takes, while humans stayed in charge of story, editing, and performance nuance. The workflow had four repeatable phases:

Concept and script

A one-page script with a clear comic arc kept things nimble. Comedy is rhythm, and no amount of believable visuals will replace human judgement about beats and punchlines.

Previsualization

We generated mood boards, color studies, and rough animatics with image-generation models. These early artifacts allowed the team to lock framing, color palettes, and camera concepts overnight instead of waiting days or weeks for traditional VFX.

Production (AI-assisted)

Here we asked the AI to do what it does well: background plates, alternate costume textures, crowd fills, and many “takes” with small variations. For character performance we blended AI-generated footage with human-sourced voice or motion references when needed. That combination kept expressions watchable and the emotional core intact.

Post-production and sound

Human editors assembled the strongest AI-generated segments, stabilized odd artifacts, refined pacing, and layered authentic foley and music. Even when a model provided native audio, we used it as a draft and often replaced or enhanced it with human-recorded ADR and foley for cinematic clarity.

We followed two guiding rules: keep camera language simple, because models struggle with ornate, complex moves; and avoid single frames that demand dozens of tiny, interacting elements, since those still break generative continuity.

Technical takeaways for creators

If you are experimenting with AI-driven video, here are the practical lessons we learned, distilled for creators:

Use multimodal pipelines when possible. When visuals and audio come from the same engine, emotional match improves, and rough cuts are faster to assemble.

Combine still-image models with video models. Use image generators for high-resolution plates and texture work, and use video models for motion continuity. That gives you the best of both worlds.

Be obsessive about prompt repetition. Character consistency depends on using the same seeds, reference images, and precise wording across prompts. Small wording changes can alter faces or body proportions.

Human editing remains essential. Models still produce micro-flaws: jittery wrists, minor lighting flicker, or unnatural hand poses. Treat AI output as raw footage and fix it in edit.

Document your pipeline. Save prompts, versions, and settings. That reproducibility helps you and the community iterate faster.

Mind ethics and rights. Datasets and likeness rights matter. Use models that respect copyright, and be transparent with your audience about what is synthetic.

Why Satta King resonated with the AI community

The film’s reception came down to three factors:

Visible, clever use of technology. We did not hide the AI contributions. Instead, we leaned into them as part of the joke. That honesty built trust.

Respect for comedic craft. The laugh beats were human-edited. AI supplied visual variety, but humans kept timing, pacing, and rhythm intact.

Reproducibility. Creators could see how we approached prompts and editing, and then riff on the format. When a short works like a template, creators remix it and iterate quickly, and every remix spreads it further.

Creators responded because they saw something they could reasonably reproduce with their own tools and taste. The short did not feel like a locked-off studio experiment; it felt like a community project with room to imitate, parody, and expand.

Broader implications for filmmakers, brands, and storytellers

The democratization of high-quality visuals changes the rules for production and storytelling.

Cost and access

Scenes once requiring large crews or exotic locations can be approximated at a fraction of the cost. Indie filmmakers and small brands can now access visuals and production value that used to be locked inside studio budgets.

Speed of ideation

Directors and content teams can ideate and test looks overnight. That speed is invaluable for social-first campaigns, rapid-response marketing, and iterative storytelling.

New roles and skill sets

Expect credits like prompt designer, AI continuity editor, and model compositor to appear in production lists. Filmmaking will fragment into more hybrid roles that mix classical craft with prompt engineering and model orchestration.

Creative expansion

We will see new formats: micro-sagas designed to be rapidly iterated, serialized AI-native comedy that riffs on memes in near real time, and interactive shorts that respond to audience inputs.

However, limitations persist. Long-form continuity across feature-length narratives remains a technical hurdle, complex object-to-object interactions can confuse models, and nuanced micro-expressions for emotionally dense scenes still favor human actors. For the foreseeable future, hybrid workflows that mix AI with live performance and human direction produce the best results.

Concrete lessons — a short playbook

Start small and character-driven, then scale.

Prototype publicly to gather community input and build momentum.

Put craft first: comedy is edited, not generated.

Store every prompt and version: reproducibility matters.

Be transparent about synthetic content and respect rights and ethics.

A slightly deeper technical note (without the jargon)

Two model families fueled this leap: advanced diffusion models for images, and transformer-diffusion hybrids for video. Image models now generate detailed, high-resolution stills with good texture and color. Video models break short clips into sequences of small patches, or tokens, and refine them so that movement and spatial relationships make sense across frames.
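As a rough illustration of the “tokens” idea, the arithmetic below counts how many spacetime patches a short clip breaks into. The clip size and patch sizes are illustrative assumptions, not the settings of any particular model, and many systems patch a compressed latent representation rather than raw pixels, which changes the actual numbers.

```python
def patch_token_count(frames: int, height: int, width: int,
                      t_patch: int, s_patch: int) -> int:
    """Number of t_patch x s_patch x s_patch patches that tile a clip."""
    for dim, size in ((frames, t_patch), (height, s_patch), (width, s_patch)):
        if size <= 0 or dim % size:
            raise ValueError(f"patch size {size} must be positive and divide {dim}")
    return (frames // t_patch) * (height // s_patch) * (width // s_patch)


# 16 frames of 256x256, cut into 2-frame x 16x16-pixel patches:
# (16 / 2) * (256 / 16) * (256 / 16) = 8 * 16 * 16
print(patch_token_count(16, 256, 256, t_patch=2, s_patch=16))  # 2048
```

Doubling the clip length doubles the token count, which hints at why sustaining coherence over longer clips is still hard.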

Where previous-generation models often lost a character’s identity across frames, newer approaches keep that identity coherent. Some systems now even simulate believable physics, so objects interact in ways that look natural. These technical steps deliver one practical result: creators no longer need to assemble many disparate tools and hope for consistency. Instead, an orchestrated multimodal pipeline can produce a unified visual and audio draft fast enough to enable true creative iteration.

Where this is headed

The rate of improvement is rapid. Expect progress along these fronts:

Longer clips: Models will get better at sustaining character and story across longer durations.

Real-time rendering: Live, near-instant generation will enable new interactive formats.

Industry-specific tools: Specialized models will appear for particular genres, like product commercials, news-style pieces, or particular cinematic looks.

Better audio integration: Native, polished dialogue and foley will reduce the need for extensive ADR.

For brands, that means rapid, low-cost storytelling at scale. For filmmakers, it means new creative tools to visualize ideas previously unattainable. And for audiences, it means richer digital-first content, often produced by smaller teams with bigger imaginations.

Tech amplifies taste

Satta King was not proof that AI will replace directors, actors, or editors. Rather, it showed that technology can amplify craft. We used AI to create visual variety, accelerate iteration, and explore bolder frames. We used human taste to select takes, trim timing, and invest comic beats with emotion. The short’s reception confirmed a single, simple truth: when technology and craft collaborate, the output feels alive.

If you are a filmmaker, brand marketer, or creative lead interested in this space, start with a clear, simple idea that plays to what current models do well: short scenes, tight character beats, and strong editorial taste. Build hybrid workflows that keep humans in charge of pacing and emotional decisions. Document your process and share it, because the community learns fast, and remix culture drives improvements.

Satta King sparked a community conversation. Now it is your turn to take the spark and build something funny, strange, or beautiful. Tell us when you do; we want to see the next community sensation.

—

Practical prompting strategy that actually works

Many creators ask what to write in a prompt to get stable, believable results. A simple template keeps things consistent without turning prompts into novels. Try person, place, period, palette, and purpose. For example: “young shopkeeper in a crowded market, Mumbai at dusk, late monsoon season, warm sodium-vapor palette, 35 mm lens look, shallow depth of field, gentle handheld movement, intended for a light comedic beat.” This structure ensures you specify character, environment, time context, color mood, lens feel, and the emotional intent for the moment. You can add a brief “quality” line if needed, like “natural skin texture, modest contrast, soft highlights,” but stop before the prompt becomes a dump of adjectives that conflict with one another.
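The template can be kept consistent by filling named fields instead of retyping free text. This is a minimal sketch; the `ShotPrompt` class is a hypothetical helper, not part of any generation tool, and it simply joins the fields in a fixed order.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShotPrompt:
    """Hypothetical person/place/period/palette/purpose prompt template."""
    person: str
    place: str
    period: str
    palette: str
    purpose: str
    lens: str = ""     # optional lens and camera feel
    quality: str = ""  # optional short quality line

    def render(self) -> str:
        """Join the non-empty fields, in a fixed order, into one prompt."""
        parts = [self.person, self.place, self.period, self.palette,
                 self.lens, self.purpose, self.quality]
        return ", ".join(p.strip() for p in parts if p.strip())


shot = ShotPrompt(
    person="young shopkeeper in a crowded market",
    place="Mumbai at dusk",
    period="late monsoon season",
    palette="warm sodium-vapor palette",
    lens="35 mm lens look, shallow depth of field, gentle handheld movement",
    purpose="intended for a light comedic beat",
)
print(shot.render())
```

Because the field order never changes, two prompts for the same shot differ only where you deliberately changed a field.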

1) Use negative prompts sparingly to remove unwanted quirks, like mangled text on signs or extra fingers, but avoid long lists that fight the model. A short, high-impact negative list is easier to maintain. Lock seeds whenever you want repeatable output for the same character or prop, and save reference images so you can re-target the same face, costume, or environment settings later.
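One way to keep negative lists short and seeds locked is to store them together in one settings record. This is a tool-agnostic sketch: the `GenerationSettings` class and the five-term cap are assumptions, not any generator’s API, so map the fields onto whatever your tool actually exposes.

```python
from dataclasses import dataclass, replace

MAX_NEGATIVES = 5  # assumed cap for a "short, high-impact" negative list


@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical bundle of everything needed to repeat a generation."""
    prompt: str
    seed: int
    negatives: tuple[str, ...] = ()
    reference_images: tuple[str, ...] = ()

    def __post_init__(self) -> None:
        # Reject long negative lists, which tend to fight the model.
        if len(self.negatives) > MAX_NEGATIVES:
            raise ValueError(
                f"{len(self.negatives)} negative terms; keep it to {MAX_NEGATIVES} or fewer")

    def negative_prompt(self) -> str:
        return ", ".join(self.negatives)


base = GenerationSettings(
    prompt="young shopkeeper behind his counter, Mumbai at dusk",
    seed=1234,
    negatives=("mangled sign text", "extra fingers"),
    reference_images=("refs/shopkeeper_front.png",),
)
# Same seed, negatives, and references; only the prompt changes for the next shot.
next_shot = replace(base, prompt="young shopkeeper counting coins, Mumbai at dusk")
```

A fixed seed only repeats a result when the model version and the other settings stay the same too, which is why they travel together here.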

2) Keep characters consistent across shots. Character drift is still the number one immersion killer. Three habits prevent it most of the time. First, use the same seed and a small library of reference stills for that character. Second, write a short, repeatable identity block in the prompt, including age range, hair length, facial hair, and a signature wardrobe element that acts as an anchor, such as a red scarf or a silver watch. Third, avoid over-specifying the face in new wording, since synonyms can pull the model toward a similar, but different, person. If you do need a change, change one thing at a time, for example hair length, and keep the rest of the identity block untouched.
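The identity-block habit, including the “one change at a time” rule, can be enforced in a few lines. A minimal sketch, assuming you paste `Identity.block()` verbatim into every prompt; the class and helper names are hypothetical.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class Identity:
    """Hypothetical identity block, reused verbatim in every prompt."""
    age_range: str
    hair: str
    facial_hair: str
    anchor: str  # signature wardrobe element, e.g. a red scarf

    def block(self) -> str:
        return f"{self.age_range}, {self.hair}, {self.facial_hair}, wearing {self.anchor}"


def change_one(identity: Identity, **change: str) -> Identity:
    """Return a copy with exactly one attribute changed; refuse anything more."""
    if len(change) != 1:
        raise ValueError("change one identity attribute at a time")
    return replace(identity, **change)


def changed_fields(a: Identity, b: Identity) -> list[str]:
    """Names of the attributes that differ between two identity blocks."""
    return [f.name for f in fields(Identity) if getattr(a, f.name) != getattr(b, f.name)]


hero = Identity("man in his late 20s", "short black hair", "trimmed stubble", "a red scarf")
print(hero.block())
# man in his late 20s, short black hair, trimmed stubble, wearing a red scarf
longer_hair = change_one(hero, hair="shoulder-length black hair")
print(changed_fields(hero, longer_hair))  # ['hair']
```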

3) Managing motion, physics, and camera language is paramount. Motion realism improves when the camera grammar is simple and deliberate. Fewer whip pans and fewer complex dollies equal fewer artifacts. If a shot demands fast action, generate a calm base pass first, then a second version with more energy, and choose the one that holds up in edit. For interactions, keep the number of moving pieces low. If a gag needs spilled water, a swinging door, and three people crossing, split the beat into two simpler shots, then intercut. The audience experiences smooth cause and effect, and you avoid compounding model errors.
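Splitting a crowded gag can be treated as a simple grouping rule. This sketch assumes you list a beat’s independently moving elements and choose a per-shot limit (two here, an arbitrary choice); it only plans the shots and does not call any generator.

```python
def split_beat(moving_elements: list[str], max_per_shot: int = 2) -> list[list[str]]:
    """Group a gag's moving elements into shots with at most max_per_shot each."""
    if max_per_shot < 1:
        raise ValueError("max_per_shot must be at least 1")
    return [moving_elements[i:i + max_per_shot]
            for i in range(0, len(moving_elements), max_per_shot)]


beat = ["spilled water", "swinging door", "three people crossing"]
print(split_beat(beat))
# [['spilled water', 'swinging door'], ['three people crossing']]
```

Each resulting group becomes one simpler shot, and the edit intercuts them to restore cause and effect.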

4) For physics, watch contact points. Hands on tables, feet on stairs, objects passing behind others, and reflections in glass will reveal whether the scene is grounded. If something looks floaty, adjust the prompt with weight verbs, such as “leans into the counter,” “rests elbows firmly,” or “footfalls with slight bounce on rubber floor,” which often nudge the model toward better physical cues.
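If you review shots with a list of contact points that looked floaty, the weight verbs above can be appended mechanically. A small sketch; the phrases come from the text, while the contact-point keys and the helper name are hypothetical.

```python
# Phrases quoted from the tip above; the contact-point keys are hypothetical labels.
WEIGHT_CUES = {
    "counter": "leans into the counter",
    "elbows": "rests elbows firmly",
    "floor": "footfalls with slight bounce on rubber floor",
}


def add_weight_cues(prompt: str, floaty_contacts: list[str]) -> str:
    """Append a weight verb for each contact point that looked floaty in review."""
    cues = [WEIGHT_CUES[c] for c in floaty_contacts if c in WEIGHT_CUES]
    return ", ".join([prompt, *cues])


print(add_weight_cues("shopkeeper behind his stall", ["counter", "floor"]))
# shopkeeper behind his stall, leans into the counter, footfalls with slight bounce on rubber floor
```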

5) Approach color management and grading so that they unify mixed sources. Treat AI output like footage from a new, slightly eccentric camera. Build a neutral baseline first, then apply a look. A clean workflow is: normalize exposure, fix white balance, reduce extreme local contrast, then add a gentle film-like curve and a subtle grain plate to glue shots together. If you prefer LUTs, place them after you normalize, not before. Keep saturation under control, as many models over-saturate reds and cyans by default. A pinch of halation, gate weave, or film grain can hide tiny temporal artifacts and help mixed sources feel like one world.
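The order of operations matters more than any single setting, so here it is as a toy pipeline on a list of RGB pixels. This is an illustrative sketch, not a real grading tool: gray-world white balance, a global contrast pull (standing in for local contrast reduction), a smoothstep S-curve, and Gaussian grain are simple stand-ins chosen for clarity, and every default value is an assumption.

```python
import random

Pixel = tuple[float, float, float]  # RGB, each channel in [0, 1]


def clamp(x: float) -> float:
    return min(1.0, max(0.0, x))


def luma(p: Pixel) -> float:
    r, g, b = p
    return 0.2126 * r + 0.7152 * g + 0.0722 * b  # Rec. 709 weights


def normalize_exposure(img: list[Pixel], target: float = 0.4) -> list[Pixel]:
    """Scale so mean luma hits an assumed mid-grey target."""
    mean = sum(luma(p) for p in img) / len(img)
    k = target / mean if mean > 0 else 1.0
    return [tuple(clamp(c * k) for c in p) for p in img]


def gray_world_balance(img: list[Pixel]) -> list[Pixel]:
    """White balance by equalizing channel means (gray-world assumption)."""
    means = [sum(p[i] for p in img) / len(img) for i in range(3)]
    grey = sum(means) / 3
    gains = [grey / m if m > 0 else 1.0 for m in means]
    return [tuple(clamp(c * g) for c, g in zip(p, gains)) for p in img]


def soften_contrast(img: list[Pixel], amount: float = 0.9) -> list[Pixel]:
    """Pull values toward mid-grey; a global stand-in for local contrast reduction."""
    return [tuple(clamp(0.5 + (c - 0.5) * amount) for c in p) for p in img]


def limit_saturation(img: list[Pixel], sat: float = 0.9) -> list[Pixel]:
    """Blend each channel toward the pixel's luma to tame over-saturation."""
    out = []
    for p in img:
        y = luma(p)
        out.append(tuple(clamp(y + (c - y) * sat) for c in p))
    return out


def film_curve(img: list[Pixel], strength: float = 0.3) -> list[Pixel]:
    """Gentle S-curve: blend toward smoothstep, which keeps 0, 0.5 and 1 fixed."""
    return [tuple(clamp(c + strength * (c * c * (3 - 2 * c) - c)) for c in p) for p in img]


def add_grain(img: list[Pixel], sigma: float = 0.01, seed: int = 0) -> list[Pixel]:
    """Seeded monochrome grain, so repeated renders match exactly."""
    rng = random.Random(seed)
    out = []
    for p in img:
        n = rng.gauss(0.0, sigma)
        out.append(tuple(clamp(c + n) for c in p))
    return out


def grade(img: list[Pixel], lut=None, seed: int = 0) -> list[Pixel]:
    # 1) Neutral baseline.
    img = normalize_exposure(img)
    img = gray_world_balance(img)
    img = soften_contrast(img)
    img = limit_saturation(img)
    # 2) Look: an optional LUT only after normalizing, then curve and grain.
    if lut is not None:
        img = [lut(p) for p in img]
    img = film_curve(img)
    return add_grain(img, seed=seed)
```

In a real project each step would be a node or layer in your grading software; the point of the sketch is only the sequence: baseline first, look second.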

6) Audio, voice, and comedic timing: Even when a generator supplies audio, think of it as a storyboard for your ears. Replace temp dialogue with clean voice work and layer foley that matches footsteps, fabric swishes, and object touches. Comedy benefits from rhythm more than from loudness. Use silence and breathable pauses to set up jokes, then hit the cut on the first frame of the reaction, not on the last syllable of the line. Keep music simple and supportive. If the cue competes with a punchline, lower it or remove it during the gag, then bring it back after the laugh lands.
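Two of these timing rules reduce to simple arithmetic: cutting on the first frame of the reaction, and ducking music between the gag and the laugh. This sketch assumes you have logged those moments in seconds; the function names and the -12 dB default are hypothetical starting points, not a standard.

```python
import math


def first_reaction_frame(reaction_onset_s: float, fps: float) -> int:
    """Index of the first frame starting at or after the reaction onset."""
    # Round first so float noise such as 48.000000001 does not push the cut a frame late.
    return math.ceil(round(reaction_onset_s * fps, 6))


def music_gain(t: float, gag_start: float, laugh_end: float,
               duck_db: float = -12.0) -> float:
    """Linear gain for the music bed: ducked from the gag until the laugh lands."""
    if gag_start <= t < laugh_end:
        return 10 ** (duck_db / 20)  # pass duck_db=float("-inf") to remove the music
    return 1.0


print(first_reaction_frame(2.0, 24))   # 48
print(first_reaction_frame(2.01, 24))  # 49
```

In an actual mix you would ramp the gain over a few frames rather than switching instantly, since a hard step can click.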

7) Quality control checklist before you publish: Run a fast pass through this list on every cut. Hands and fingers, eye lines, shadow direction, reflections, occlusions when someone walks behind another person, consistency of jewelry or badges between shots, text legibility on signs, lip sync with plosives and fricatives, and background repetition that may look like a tiled texture. Stabilize any micro-jitters and fix cadence issues that make motion look like it is stuttering. If a shot cannot be saved, replace it with a reaction shot or a cutaway. There is always a cleaner angle that serves the joke.
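Writing the checklist down as data makes it harder to skip an item under deadline. A minimal sketch, assuming you record a pass/fail per check for each shot; the shot IDs and the check labels (condensed from the list above) are illustrative.

```python
QC_CHECKS = (
    "hands and fingers", "eye lines", "shadow direction", "reflections",
    "occlusions", "jewelry/badge consistency", "sign text legibility",
    "lip sync on plosives and fricatives", "background repetition",
    "micro-jitter and cadence",
)


def open_items(results: dict[str, bool]) -> list[str]:
    """Checks that failed or were never run, in checklist order."""
    return [c for c in QC_CHECKS if not results.get(c, False)]


def qc_report(shots: dict[str, dict[str, bool]]) -> dict[str, list[str]]:
    """Only the shots that still have open items."""
    return {shot: items for shot, r in shots.items() if (items := open_items(r))}


shots = {
    "sh010": {check: True for check in QC_CHECKS},
    "sh020": {"hands and fingers": False},
}
print(qc_report(shots))
```

Treating an unrun check as open means a shot only drops out of the report once every item has been explicitly passed.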

8) Ethics, consent, and disclosure: Treat likeness and brand marks with care. Do not use a living person’s face or a distinctive trademark without permission. Avoid training or fine-tuning on data you do not have rights to use. If a platform embeds provenance metadata or a watermark, leave it intact. Finally, be transparent with collaborators and clients about which parts are synthetic. Clear communication reduces confusion and sets accurate expectations about what can, and cannot, be changed late in the process.

9) Pitfalls to avoid: Overstuffed prompts that mix too many ideas often produce bland, average-looking scenes. Calibrate specificity. Aim for the minimum words that fully define the shot. Watch aspect ratios that are far from standard delivery; odd ratios can make composition unpredictable and complicate later crops. Resist the temptation to oversharpen or denoise aggressively, which can create plastic skin and crisp halos around edges. Keep a light touch. Let the edit and performances carry the weight.
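Two of these pitfalls, bloated prompts and unusual aspect ratios, are easy to lint before you spend a generation. This is a rough sketch: the 60-word budget, the 2% tolerance, and the table of “standard” ratios are assumptions to edit for your own platforms.

```python
from typing import Optional

# Assumed delivery ratios; edit this table for the platforms you actually target.
STANDARD_RATIOS = {"16:9": 16 / 9, "9:16": 9 / 16, "1:1": 1.0, "4:5": 4 / 5, "2.39:1": 2.39}


def nearest_standard_ratio(width: int, height: int, tolerance: float = 0.02) -> Optional[str]:
    """Name of the closest standard ratio, or None if nothing is within tolerance."""
    ratio = width / height
    name, value = min(STANDARD_RATIOS.items(), key=lambda kv: abs(kv[1] - ratio))
    return name if abs(value - ratio) / value <= tolerance else None


def lint_prompt(prompt: str, max_words: int = 60) -> list[str]:
    """Flag prompts that have grown long or repeat the same descriptor."""
    warnings = []
    words = prompt.split()
    if len(words) > max_words:
        warnings.append(f"{len(words)} words; aim for {max_words} or fewer")
    seen = set()
    for phrase in (p.strip().lower() for p in prompt.split(",")):
        if phrase and phrase in seen:
            warnings.append(f"repeated descriptor: {phrase!r}")
        seen.add(phrase)
    return warnings


print(nearest_standard_ratio(1920, 1080))  # 16:9
print(lint_prompt("soft light, 35 mm, soft light"))
```

A word count cannot detect adjectives that contradict each other; that part still needs a human read.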

---

Opinion: the creative edge still lives in the edit

Technology lowers barriers, but it does not invent taste. The editor’s job is still to decide what the audience feels, and when. Good comedy hides in the exact frame you cut on, the breath you leave in, the glance you hold for a beat longer than expected. AI gives you more takes than you could afford to shoot, and more looks than you could build in a day. The art is to choose the one that serves the moment, then get out of the way of the joke.

A tool-agnostic pipeline you can copy and adapt

Preproduction: write a one-pager, collect references, and define a limited color world. Previz: generate still boards for key beats, then a low-fidelity animatic for timing. Generation passes: produce clean plates, then character passes, then any specialized action passes. Conform and edit: bring everything into your NLE, sync audio, and make the story work with the simplest possible cuts. Grade and mix: normalize, apply your look, and finish the sound so dialogue is clear and effects are tasteful. Master and archive: export delivery formats, keep your prompts and seeds with your project files, and label versions clearly for future reuse.
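For the archive step, one lightweight option is a small JSON manifest per shot version saved inside the project folder. A minimal sketch using only the Python standard library; the folder layout, the `sh020_v003.json` naming scheme, and the field names are assumptions, not a convention from any specific tool.

```python
import json
import tempfile
from datetime import date
from pathlib import Path


def save_manifest(project_dir: str, shot_id: str, version: int, prompt: str,
                  seed: int, negatives: list[str], references: list[str],
                  notes: str = "") -> Path:
    """Write one shot version's generation settings next to the project files."""
    record = {
        "shot": shot_id,
        "version": version,
        "date": date.today().isoformat(),
        "prompt": prompt,
        "seed": seed,
        "negatives": negatives,
        "references": references,
        "notes": notes,
    }
    # Zero-padded versions sort correctly: sh020_v003.json, sh020_v004.json, and so on.
    path = Path(project_dir) / "manifests" / f"{shot_id}_v{version:03d}.json"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(record, indent=2, ensure_ascii=False), encoding="utf-8")
    return path


def load_manifest(path: Path) -> dict:
    return json.loads(path.read_text(encoding="utf-8"))


with tempfile.TemporaryDirectory() as tmp:
    saved = save_manifest(tmp, "sh020", 3, "young shopkeeper counting coins, Mumbai at dusk",
                          seed=1234, negatives=["extra fingers"],
                          references=["refs/shopkeeper_front.png"])
    restored = load_manifest(saved)
    print(saved.name)  # sh020_v003.json
```

Plain JSON files diff cleanly in version control and stay readable even if you switch tools.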

Publishing and community feedback

When you publish, add a short maker’s note that lists the tools involved, the areas where AI was used, and the parts that were traditionally crafted. Invite constructive feedback on what looked natural and what did not. Community notes become a free R&D loop. Over time, you will build a stable of prompts and looks that become your signature, which is far more valuable than any single piece of software.

Where to focus next

If you have limited time to improve, invest it in three areas: better prompts that describe camera language and physical intent, stronger audio that supports, not smothers, the humor, and a reliable checklist that catches the small errors before the audience does. Those three areas compound quickly. With each project, your baseline quality rises, and iteration speed improves.

---

The tools will keep changing, but the fundamentals of story and performance remain. Use new capabilities to test bolder ideas, not to hide thin ideas behind busy visuals. Keep scenes simple, reactions honest, and edits clean. The audience cares about characters, not pipelines. If AI helps you get to the moment that makes them care or laugh, you are using it well.

Let’s Create Your Viral Story

Want to transform your ideas into share‑worthy micro‑dramas? Partner with SBN Studios today!

© Sixteen By Nine Media 2024. All rights reserved.

SBN Media | AI Video Studio & Corporate Film Production – Mumbai, India

Specialized in AI-powered corporate videos, brand films, product ads, and multilingual content