SBN Media’s Edge in AI Ad Film Innovation

SBN Media Team

1/16/2026 · 10 min read

Introduction: Stability Builds Trust

Adopting AI video for a brand strategy demands strict adherence to production criteria. In high-stakes advertising, consistency is everything. Logos must remain stable rather than shifting colours randomly, and actors must maintain identical features across different camera angles. However, maintaining this stability with AI requires a highly specialised skillset.

While AI tools offer incredible creative potential, maintaining stability over time is a complex craft. Without expert direction, features can shift, and faces can morph, creating a disconnect for the viewer.

At SBN Media, we possess the technical expertise to bridge this gap. We treat AI as a precision instrument. By applying the discipline of a traditional film studio to digital production, we create ad films where the character remains stable, emotional, and authentic from the first frame to the last.

What is AI Character Consistency (and Why Should You Care)?

AI Character Consistency is the technical ability to maintain a subject's identical facial features, body proportions, clothing, and voice across multiple shots, angles, and lighting conditions.

It is a sophisticated technical achievement that serves a critical psychological purpose.

The Psychology of Trust: Cognitive Ease vs. Strain

Why does this technical precision matter? According to consumer psychology, trust is built on predictability, a concept known as "Cognitive Ease." When a viewer sees a consistent face, their brain relaxes and focuses on the story.

However, when visual elements shift unexpectedly, it triggers "Cognitive Strain." The brain stops processing the message and starts processing the error.

Identity Verification: The human brain is wired to recognise faces instantly. If a face changes, even by 5%, the brain works overtime to re-identify the subject. This distraction kills your conversion rate.

Emotional Disconnect: Storytelling relies on empathy. Viewers bond with a character’s struggle or joy. If the character morphs, that bond is severed. You cannot empathise with a glitch.

Brand Integrity: Your visual assets are a direct reflection of your product quality. "Jittery" or morphing video implies a lack of attention to detail, which can damage your brand's reputation for quality.

By solving consistency, SBN Media allows brands to tell complex, emotional stories that feel human, even if they are born from code.

Why SBN Media Leads the Market: The "Filmmaker-First" Advantage

As detailed in our 2026 Guide to Ad Film Production in Mumbai, the video production sector is shifting. We are currently living through a "Content Crisis." Brands need 10x more video content for Instagram Reels, YouTube, and LinkedIn, but marketing budgets have remained flat.

Traditional shoots often require a production cycle of three to six weeks. SBN Media provides a high-efficiency alternative to meet modern demands. Here is why we are the leaders in Filmmaker-Led AI:

1. Creative Advantage (FTII Alumni Leadership)

Located in the heart of India's film industry, SBN Media combines technical capability with cinematic heritage. Our leadership team consists of alumni from the Film and Television Institute of India (FTII), ensuring that every project is driven by storytelling principles, not just software.

An algorithm processes data; a director crafts emotion. We apply traditional cinematic techniques such as lighting, blocking, and pacing to the digital workflow. For us, AI is simply a new creative tool, and our directors ensure the output has the soul of a film.

2. A Focus on Brand Guardianship

As production volumes increase, maintaining quality control is essential. We view ourselves not just as a production house, but as custodians of your visual identity. We operate under a strict Brand Safety Protocol designed to protect your brand’s image. This internal standard ensures that every frame meets broadcast specifications and aligns perfectly with your brand guidelines before it ever leaves our studio.

3. Precision in Performance and Continuity

True consistency means a character must remain recognisable while acting, moving, and expressing emotion throughout the ad. We apply this strict standard to every character on screen. Achieving this level of stability requires a fusion of core filmmaking knowledge and advanced prompt engineering. Our directors understand exactly how an emotion should look, and our engineers know how to code that technical instruction into the AI. This ensures that every smile, frown, and movement remains natural and stable from the opening scene to the final frame.

Inside the Workflow: How SBN Media Engineers Perfect Characters

Many people believe AI character creation is as simple as typing "A doctor in a white coat." It is not. That prompt produces a different doctor every time.

To achieve broadcast-level consistency, we developed a proprietary Character Engineering Workflow. This process mirrors the rigour of a professional casting call but operates at the speed of AI.

Phase 1: The Blueprint (Story & Anatomy)

A vague request leads to a vague result. Before we touch any AI tools, we sit with our directors, creative leads, and writers to define the character's DNA. We create a "Character Bible" that includes:

Physical Anatomy: We specify bone structure, jawline width, nose shape, and eye distance. This data is fed into the model as non-negotiable constraints.

Texture & Flaws: Real people have pores, scars, and asymmetries. We add these details to ground the character in reality. Perfection looks fake; flaws look human.

Psychology: Is the character anxious or confident? A tired mother holds her posture differently from an energetic intern. These details guide the AI on how to pose the model.

Wardrobe Lock: We define the exact fabric, cut, and colour of the clothing to prevent it from shifting between scenes. We create a "digital wardrobe" that makes sure a suit's lapel doesn't change width when the character turns.
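For readers who prefer to see this as data, here is a minimal Python sketch of how a Character Bible could be stored and flattened into a reusable prompt fragment. It is an illustration only, not our production tooling: the `CharacterBible` class, its field names, and the sample character values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CharacterBible:
    """Hypothetical container for the four blueprint sections described above."""

    name_anchor: str
    anatomy: tuple[str, ...]   # bone structure, jawline, nose, eye distance
    texture: tuple[str, ...]   # pores, scars, asymmetries
    psychology: str            # guides posture and pose
    wardrobe: tuple[str, ...]  # fabric, cut, colour

    def to_prompt(self) -> str:
        """Flatten the bible into one descriptor string, always in the same order."""
        parts = [
            self.name_anchor,
            ", ".join(self.anatomy),
            ", ".join(self.texture),
            self.psychology,
            "wearing " + ", ".join(self.wardrobe),
        ]
        return "; ".join(parts)


# Sample values invented for illustration.
sample = CharacterBible(
    name_anchor="Ravi the painter",
    anatomy=("broad jawline", "roman nose", "wide-set eyes"),
    texture=("visible pores", "small scar on left brow"),
    psychology="tired but proud posture",
    wardrobe=("faded cotton shirt", "paint-stained grey trousers"),
)

print(sample.to_prompt())
```

Because the class is frozen and the fields are always joined in the same order, every shot starts from an identical description rather than one retyped from memory.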

Phase 2: Technical Character Definition

Once the visual description is approved, we translate it into precise data points.

Seed Locking: Every AI generation begins with a specific mathematical value known as a seed. Instead of letting the software choose randomly, we identify a specific seed that aligns with the character's design and lock it. This value acts as a digital fingerprint that maintains the foundational structure of the image across different scenes.

Negative Prompting: We explicitly list what we do not want, such as plastic skin textures or shifting features. This creates a guardrail for the technology. It restricts the AI from making common errors and keeps the output within our strict quality parameters.

Reference Anchoring: We generate a Master Reference sheet that includes front, side, and angled views of the character. We program the AI to look at this master image for every subsequent shot. This process keeps the facial structure stable even when the camera angle changes.
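The idea behind seed locking can be shown with a short Python sketch. It uses the standard library's `random` module as a stand-in for an image generator's starting noise, so it demonstrates the principle rather than any specific AI tool. The `LockedSettings` class, the `starting_noise` function, and all the values are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class LockedSettings:
    """Hypothetical bundle of the three Phase 2 controls."""

    seed: int
    negative_prompt: tuple[str, ...]
    reference_views: tuple[str, ...]  # front, side, and angled master views


def starting_noise(seed: int, size: int = 4) -> list[float]:
    """Stand-in for a generator's initial noise: same seed, same values."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


settings = LockedSettings(
    seed=1234,  # illustrative value
    negative_prompt=("plastic skin texture", "shifting facial features"),
    reference_views=("front", "side", "angled"),
)

# A locked seed reproduces the same starting point on every run...
assert starting_noise(settings.seed) == starting_noise(settings.seed)
# ...while a different seed gives a different starting point.
assert starting_noise(settings.seed) != starting_noise(settings.seed + 1)
```

Keeping the seed, the negative prompt, and the reference views in one immutable object means a scene cannot quietly be generated with one of the three missing.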

Phase 3: The Production (Scene Generation)

The most challenging aspect of AI video is keeping the facial features stable while the character moves. We solve this by treating the AI generation as a controlled production process.

Prompt-Led Scene Construction: We generate the video scenes using our precise character data and locked settings. We do not rely on random generation. Instead, we use specific, layered prompts to direct the character's movement and action. This ensures that the character acts exactly according to the script while maintaining the visual identity we established in the previous phase.

Environmental Stability: Consistency applies to the room as well as the person. We focus on generating stable environments first and then placing the character within them. This guarantees that if the camera pans to the left, the door or window seen in the wide shot remains exactly where it should be in the close-up.

Phase 4: The Performance (Emotion and Speech)

A consistent face is not enough if the character acts like a robot. We focus on the nuances that make a performance feel human.

Phonetic Lip-Synchronisation: We map mouth movements strictly to the phonetics of the audio track. This creates natural speech patterns that align perfectly with the voice. We adjust specific parameters like jaw openness and mouth shape to match the nuances of the language, whether the script is in Hindi, English, or Marathi.

Micro-Expressions: We manually tune the eyes and brows to match the emotional sentiment of the script. Whether the scene requires empathy, surprise, or joy, we use expert prompting to ensure the character feels alive and engaged with the viewer.
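To make the lip-sync idea concrete, here is a rough Python sketch of a phoneme-to-mouth-shape lookup. This is a common general approach and an assumption on our part for illustration, not a description of any particular tool. The `VISEMES` table, its phoneme labels, and the jaw-openness numbers are all invented.

```python
# Hypothetical table: phoneme -> (jaw_openness, mouth_shape).
# Jaw openness runs from 0.0 (closed) to 1.0 (fully open); values are illustrative.
VISEMES = {
    "AA": (0.9, "open"),
    "IY": (0.3, "spread"),
    "UW": (0.4, "rounded"),
    "M": (0.0, "closed"),
    "F": (0.1, "lip-to-teeth"),
}
REST = (0.0, "closed")  # fallback for phonemes missing from the table


def mouth_track(phonemes: list[str]) -> list[tuple[float, str]]:
    """Return one (jaw_openness, mouth_shape) pair per phoneme, in order."""
    return [VISEMES.get(p, REST) for p in phonemes]


# The word "mama" as a simplified phoneme sequence.
print(mouth_track(["M", "AA", "M", "AA"]))
# prints [(0.0, 'closed'), (0.9, 'open'), (0.0, 'closed'), (0.9, 'open')]
```

A real pipeline would also carry timing for each phoneme and a table tuned per language. The sketch only shows why the mouth follows the audio instead of moving at random.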

Phase 5: Expert Editing and Polish (Post-Production)

The raw AI video is effectively a digital negative. The final polish comes from human expertise.

Colour Grading: We adjust the colour balance and exposure so the lighting looks consistent and professional from the first frame to the last.

Sound Design: A video lacks impact without sound. We layer music, voice-overs, and sound effects to give the video real emotion and cinematic weight.

Case Studies: SBN Media in Action

We don't just talk about theory; we apply these workflows to solve complex business problems.

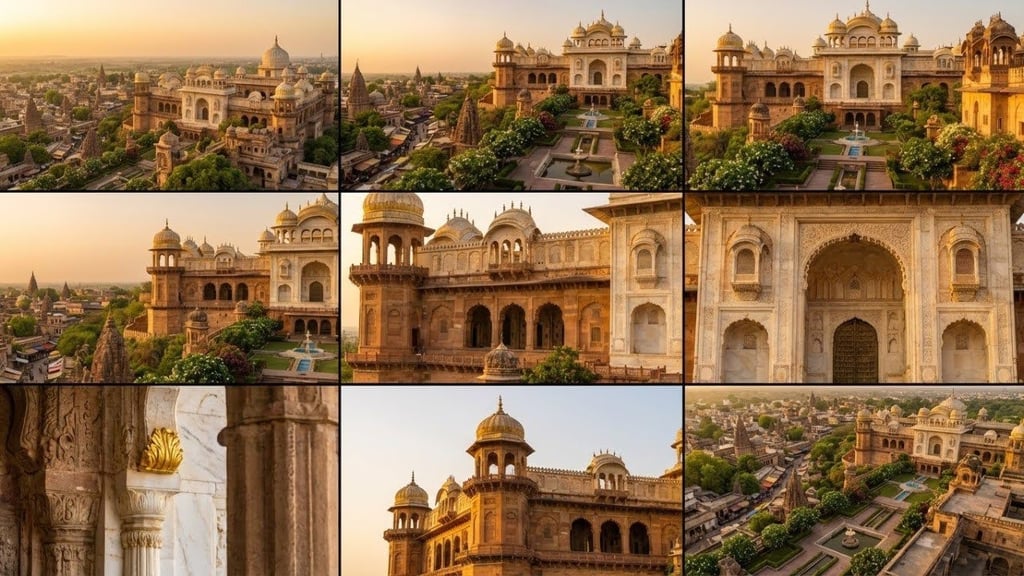

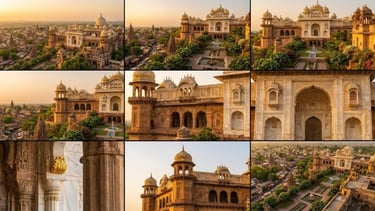

1. The BirlaNu Ad: Engineering Emotional Continuity

The Challenge: The script required a single protagonist, a painter, to appear across vastly different environments and lighting conditions. He needed to look identical in the dusty haze of a construction site, the colourful chaos of a Holi celebration, and the warm, flickering glow of Diwali. In standard AI video, changing the lighting or season usually causes the face to morph or lose resemblance.

The Solution: We engineered a Master Identity for the painter. By applying our strict character locking workflow, we ensured his facial structure, specifically his eyes, jawline, and skin texture, remained rigid even when covered in Holi powder or viewed from different camera angles. We prioritised his facial stability above all else to ensure his expressions of fatigue and joy translated perfectly.

The Result: A 66-second seamless narrative where the character’s identity never wavered. The audience connected with the person, not the technology, proving that perfect consistency is the key to emotional storytelling.

Read the full breakdown: AI Video Goes from Soulless to Stunning

2. The Zydus Lifesciences Ad: Optimising Empathy

The Challenge: The campaign featured three women from different age groups in intimate domestic settings. The script required them to transition from busy morning routines to quiet, reflective moments in front of a mirror. Standard AI often fails at this specific transition, causing the face in the reflection to look different from the face in the room. A glitch here would turn a sensitive health message into something unsettling.

The Solution: We engineered three distinct "Everyday Personas" and locked their facial data using our proprietary workflow. We paid special attention to the mirror sequences, using advanced control techniques to ensure the reflection was a perfect match to the character we established in the wide shots. We also stabilised their micro-expressions to ensure their hesitation and relief looked genuine, not robotic, across every frame.

The Result: A library of consistent, empathetic characters that viewers instinctively trusted. The visual stability ensured that the focus remained on the "Two Hands, Three Minutes" message, not the medium delivering it.

Read the full breakdown: Optimising Empathy – AI as the New Standard in Health Communication

SBN Media vs. Standard AI Tools

If you are deciding between a DIY approach and a professional partner, here is the difference in output.

Tips for Better Character Generation

If you are experimenting with AI for internal drafts or storyboards, use these tips from our prompt engineers to improve your results.

1. Be Specific About Bone Structure

Don't just say "beautiful woman." The AI has millions of definitions for beauty. Use descriptors like "high cheekbones," "soft jawline," "roman nose," or "almond-shaped eyes." Specific anatomy gives the AI fewer chances to guess and hallucinate.

2. Use a "Name" Anchor

Assigning a specific, unique name helps the AI model associate traits with a single entity. It acts as a shorthand for the character's look within that specific session.

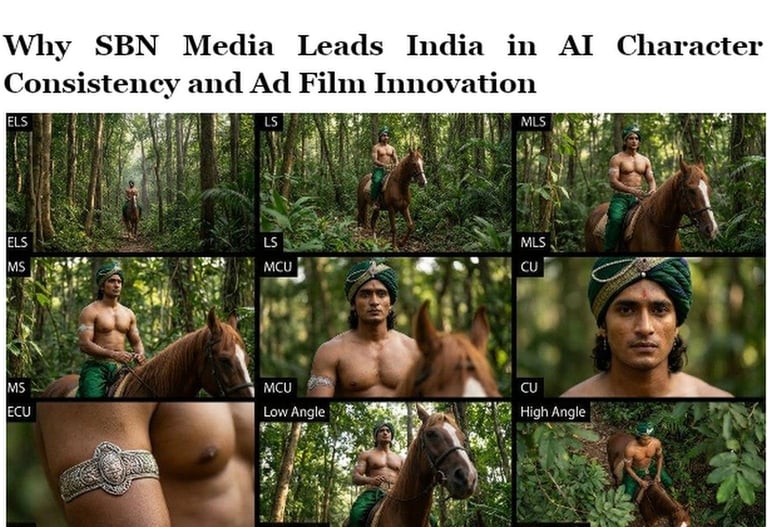

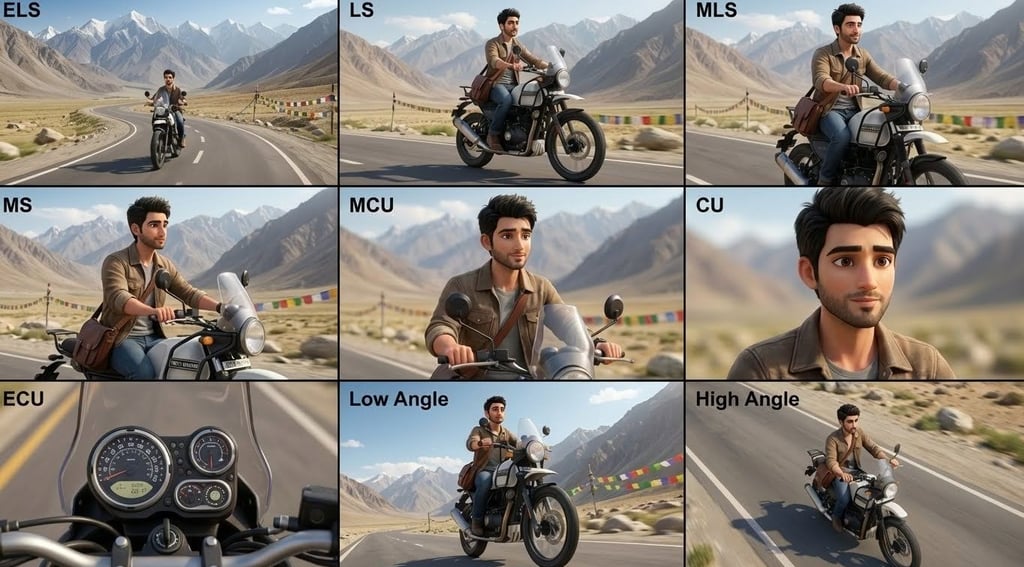

3. Test with a Grid

Before making a full video, generate a character sheet using the prompt below. If the character looks different in the grid, they will look different in the video.

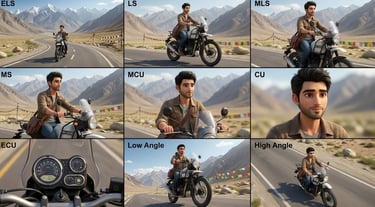

Use This Prompt: The SBN Consistency Check

Want to test if your AI tool can handle a consistent character? We use this specific prompt structure to create a "contact sheet" during our testing phase. It forces the model to render the same subject from multiple angles at once.

Copy and Paste this Prompt:

A professional 3x3 cinematic storyboard grid containing 9 panels.

The grid showcases the specific subjects/scene from the input image in a comprehensive range of focal lengths.

Top Row: Wide environmental shot, Full view, 3/4 cut.

Middle Row: Waist-up view, Chest-up view, Face/Front close-up.

Bottom Row: Macro detail, Low Angle, High Angle.

All frames feature photorealistic textures, consistent cinematic colour grading, and correct framing for the specific number of subjects or objects analysed. Lighting is [Insert Lighting Style, e.g., Soft Rembrandt]. Character is [Insert Your Name Anchor].

How to judge the result: Look at the nose and the eyebrows in the "Macro detail" vs. the "Wide shot." If they change shape, your character is not locked.
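If you run this check often, the two bracketed placeholders can be filled in programmatically. The Python sketch below reproduces the prompt text from above. The `build_prompt` helper and its parameter names are our own illustrative choices, and the sample name anchor is invented.

```python
CONSISTENCY_PROMPT = "\n\n".join([
    "A professional 3x3 cinematic storyboard grid containing 9 panels.",
    "The grid showcases the specific subjects/scene from the input image "
    "in a comprehensive range of focal lengths.",
    "Top Row: Wide environmental shot, Full view, 3/4 cut.",
    "Middle Row: Waist-up view, Chest-up view, Face/Front close-up.",
    "Bottom Row: Macro detail, Low Angle, High Angle.",
    "All frames feature photorealistic textures, consistent cinematic colour "
    "grading, and correct framing for the specific number of subjects or "
    "objects analysed. Lighting is {lighting}. Character is {name_anchor}.",
])


def build_prompt(lighting: str, name_anchor: str) -> str:
    """Fill the two placeholders, refusing blank values so none ship unfilled."""
    if not lighting.strip() or not name_anchor.strip():
        raise ValueError("lighting and name_anchor must both be non-empty")
    return CONSISTENCY_PROMPT.format(
        lighting=lighting.strip(), name_anchor=name_anchor.strip()
    )


print(build_prompt("Soft Rembrandt", "Ravi the painter"))
```

Using one fixed template for every test keeps the comparison fair: only the lighting and the character change between runs.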

Frequently Asked Questions (FAQs)

Is AI video cheaper than filming with real actors?

Yes. While high-end AI production requires a budget for professional editing and engineering, it eliminates costs like location permits, travel, large crews, and actor fees. You save approximately 40-60% compared to a traditional live-action shoot of similar quality.
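To see what a 40-60% saving means for a specific budget, here is a small worked example in Python. The live-action figure is a made-up number for illustration, and `ai_budget_range` is a hypothetical helper, not a pricing tool.

```python
def ai_budget_range(
    live_action_cost: float, low_pct: int = 40, high_pct: int = 60
) -> tuple[float, float]:
    """Return the (lowest, highest) AI cost implied by a low_pct-high_pct saving."""
    lowest = live_action_cost * (100 - high_pct) / 100
    highest = live_action_cost * (100 - low_pct) / 100
    return lowest, highest


# Hypothetical live-action quote of 1,000,000 in any currency.
lowest, highest = ai_budget_range(1_000_000)
print(f"Estimated AI production cost: {lowest:,.0f} to {highest:,.0f}")
# prints Estimated AI production cost: 400,000 to 600,000
```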

Can you make the AI character look like our CEO?

Absolutely. We can train the model on photos of a specific person to create a digital avatar. This allows your leadership to deliver messages in any language or setting without spending days on set. This is particularly useful for internal communications and training videos.

How long does it take to make an AI ad film?

A traditional ad film might take 4-6 weeks (casting, shooting, editing). At SBN Media, our AI workflow typically delivers broadcast-ready video in 2 days to 3 weeks, depending on the length of the video.

Will the audience know it is AI?

The goal is not to trick the audience, but to engage them. While trained eyes might spot AI elements, the general audience focuses on the story. When the emotion is real, the medium matters less. We believe in "Invisible AI," tech that serves the story, not the other way around.

What happens if I want to change the character's outfit later?

This is the beauty of AI. In traditional film, you would have to reshoot the entire scene. With SBN Media, we can use "In-Painting" to digitally swap the wardrobe while keeping the character's performance exactly the same. Revisions are faster and cheaper.

Absolute Character Consistency

The technology is here, but it requires a steady hand to guide it. Your brand deserves more than a glitchy, morphing avatar. It deserves a character with soul, consistency, and cinematic presence.

At SBN Media, we combine technical precision with cinematic storytelling to create characters that your audience will remember and trust.

Great content doesn’t wait. Neither should you.

SBN Media is a Mumbai-based creative studio where the discipline of traditional filmmaking meets the possibilities of generative AI. Led by FTII alumni, we partner with forward-thinking brands to craft visual narratives that are technologically advanced yet deeply human.

Let’s define the future of your visual identity together.

© Sixteen By Nine Media 2024. All rights reserved.

SBN Media | AI Video Studio & Corporate Film Production – Mumbai, India

Specialized in AI-powered corporate videos, brand films, product ads, and multilingual content